Automated Testing at Zaleos

Automated testing has grown significantly among developers over the past few years, especially with the rise of Agile methodologies and Continuous Integration practices. Some people might think that it’s hype, that it means doing work the client didn’t ask for, or that it’s a waste of time… But at Zaleos we truly believe it is a must. What about you?

Why we need tests

- To prove that your code works

This is the main reason to have unit tests: they prove that your code works. If you don’t write them, you’ll leave potentially buggy code behind. Additionally, functional tests prove that your software meets its requirements.

- To change the code with less fear

It’s a great feeling to have a good safety net while making modifications. When you change your code, you can quickly see whether you broke any functionality and fix it before it ships. That is key to refactoring your code.

- To control the technical debt

The more you can refactor, the better your code will be. Additionally, if something is very hard to test, that is probably a design smell, and you can fix it. Testing helps you minimize technical debt because it pushes you to write better software.

- To reduce the time spent debugging

We all know debugging is a heavy, time-consuming task, and we should avoid it as much as we can. Detecting bugs early, especially in unit tests, helps with that.

- To document the expected behavior

Sometimes when we dig into new code we like to check the tests first, because they give us a higher-level idea of what the source code is doing (or trying to do). You can easily identify relations between objects, detect external dependencies and much more that way.

- To enable metrics like code coverage

This is a useful measure of the health of our tests, but a word of warning: don’t force coverage in your tests! Remember that our goal is to avoid errors, not to reach a great coverage number.
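To make the coverage warning concrete, here is a minimal Python sketch. The function `apply_discount` and both tests are hypothetical, invented only for illustration. The first test executes every line, so a coverage tool would report full coverage, yet it would still pass if the arithmetic were wrong. The second one checks the behavior.

```python
def apply_discount(price, percent):
    """Hypothetical function under test: reduce a price by a percentage."""
    if percent < 0 or percent > 100:
        raise ValueError("percent must be between 0 and 100")
    return price * (100 - percent) / 100


def test_coverage_only():
    # Executes both branches, so line coverage is complete,
    # but nothing is asserted about the results.
    apply_discount(200, 25)
    try:
        apply_discount(200, 150)
    except ValueError:
        pass


def test_behavior():
    # Checks the actual results, which is what protects us from errors.
    assert apply_discount(200, 25) == 150
    assert apply_discount(80, 0) == 80
    try:
        apply_discount(200, 150)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for percent > 100")
```

Both tests contribute the same coverage, but only the second one would catch a regression.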

How tests should be

- Fully Automated: So we can focus on more important tasks than running tests.

- Self-Checking: So there is no need to review the test results in depth; they tell you when things go wrong.

- Repeatable: We want deterministic tests, so we can run them many times with exactly the same results without any human intervention between runs.

- Simple: Test one thing at a time. This helps to find the location of a bug faster.

- Expressive: That helps other developers avoid duplicating tests. It also lets the tests serve as documentation.

- Separated from the production code: Tests should reduce risk, not increase it. We have to be careful not to introduce new potential problems into the production code.
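As a rough illustration of these properties, here is a small sketch using Python’s standard `unittest` module. The function `is_expired` and its parameters are hypothetical. The current time is passed in instead of being read from the system clock, which keeps the tests repeatable. Each test checks one thing, reports its own result through assertions, and has a name that describes the expected behavior.

```python
import unittest
from datetime import datetime, timedelta


def is_expired(created_at, ttl, now):
    """Hypothetical production function. The current time is a parameter,
    so the tests do not depend on the real clock."""
    return now - created_at >= ttl


class IsExpiredTest(unittest.TestCase):
    CREATED = datetime(2020, 1, 1, 12, 0, 0)
    TTL = timedelta(minutes=30)

    def test_item_is_valid_before_ttl_elapses(self):
        now = self.CREATED + timedelta(minutes=29)
        self.assertFalse(is_expired(self.CREATED, self.TTL, now))

    def test_item_expires_exactly_at_ttl(self):
        now = self.CREATED + self.TTL
        self.assertTrue(is_expired(self.CREATED, self.TTL, now))
```

In a real project the test class would live in its own file, apart from the production code, and could be run with `python -m unittest`.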

Where tests should be

A “terrifying” story

We remember a story from a friend. He told us that they had presented a project plan to a client. The plan included some hours for testing (for TDD, he said). That probably wasn’t a smart approach: the client told them to remove those hours from the project, because they wouldn’t pay for that. The client representative claimed, “If there is any problem, you will fix it for free in the warranty period”.

What this guy doesn’t understand is that testing is not for finding bugs; testing is for avoiding bugs.

We don’t much like comparing software development with building houses, but imagine we are asked to build a wall. Someone might think that testing is equivalent to cleaning up after building the wall, a final phase. In our opinion, testing is like applying the cement that keeps the bricks together. Yes, you can build the wall without cement, and it will probably be cheaper at first, but when the client tries to hang a picture on the wall…

The theory is great, but what are you really doing?

Unit tests

We have unit tests in our products, and the tools depend on the technology each product uses: JUnit for Java, pytest for Python, and the testing package for Go. Additionally, we have our own SonarQube instance to track the quality of the code.

All these tests are run by our CI server on each commit we make. If something goes wrong, we receive a Slack notification, and merging the current branch is blocked until all the unit tests pass. This way we don’t waste our time on repetitive tasks, although we can always run the tests locally if we want.

Functional tests

We don’t like overusing functional tests. We know they are slower and harder to maintain, but they are our second line of defense. We always run these tests before releasing a product.

In some cases, such as products based on open source SIP servers, we don’t have a good unit test framework. Luckily, for this case we have SIPp. SIPp is a tool that generates SIP traffic and lets you add assertions on the SIP messages received, in order to check a header’s content, the body content, the type of message and the time taken to receive a message. It allows you to build your own scenario and generate custom messages and checks, but it requires a running product, so we consider these functional tests.
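SIPp scenarios are written in SIPp’s own XML format, which we don’t reproduce here. As a stand-in, the following Python sketch shows the kinds of checks described above: message type, header content and body content. The helper `parse_sip_message` is hypothetical, the sample response is invented and trimmed for brevity, and timing checks are left out.

```python
def parse_sip_message(raw):
    """Hypothetical helper: split a SIP message into start line, headers, body.

    Simplified on purpose: header names are lower-cased, and repeated
    headers or folded lines are not handled.
    """
    head, _, body = raw.partition("\r\n\r\n")
    lines = head.split("\r\n")
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return lines[0], headers, body


# Invented sample response, for illustration only.
SAMPLE_RESPONSE = (
    "SIP/2.0 200 OK\r\n"
    "Call-ID: abc123@example.invalid\r\n"
    "CSeq: 1 INVITE\r\n"
    "Content-Type: application/sdp\r\n"
    "\r\n"
    "v=0\r\n"
)


def test_invite_is_answered_with_200():
    start_line, headers, body = parse_sip_message(SAMPLE_RESPONSE)
    assert start_line.split(" ", 2)[1] == "200"  # type of message
    assert headers["cseq"].endswith("INVITE")  # header content
    assert headers["content-type"] == "application/sdp"
    assert body.startswith("v=0")  # body content
```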

Additionally, we have some applications that implement a REST API. No problem: there are tools like SmartBear’s SoapUI that help us run our test cases and assert on the responses. Luckily, many people have identified the need to test faster and more easily, and they are developing great tools.
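The idea of asserting on REST responses can be sketched with nothing but the Python standard library. This is not how SoapUI works internally; it is only an illustration of the principle. The `/status` endpoint, its JSON payload and the `StatusHandler` class are assumptions made up for the example, standing in for the application under test.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class StatusHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the application under test."""

    def do_GET(self):
        payload = json.dumps({"status": "ok"}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, format, *args):
        pass  # keep the test output quiet


def test_status_endpoint():
    # Port 0 asks the operating system for any free port.
    server = HTTPServer(("127.0.0.1", 0), StatusHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    # An empty ProxyHandler makes sure the request goes straight to localhost.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        url = "http://127.0.0.1:%d/status" % server.server_address[1]
        with opener.open(url, timeout=5) as response:
            assert response.status == 200
            assert response.headers["Content-Type"] == "application/json"
            assert json.loads(response.read()) == {"status": "ok"}
    finally:
        server.shutdown()
        server.server_close()
```

Because the product has to be running to answer the request, this kind of check belongs with the functional tests rather than the unit tests.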

But not everything is already done. We have also developed some tools and scripts of our own to automate functional tests. Some of these tools can run in Docker containers inside our CI process. That’s great, because developers don’t need to care about the infrastructure or other details outside the functionality they are implementing, which is what they really want to do!

Load tests

Ok, at this point the functionality does the job. All edge cases are under control, bad user input is handled, and the main scenario works perfectly. But to be sure that the product is valuable, we need to ensure that non-functional requirements are met too.

We are aware of that, and we have different tools for load testing. Again, we can use SIPp to generate multiple calls per second, up to whatever number of calls we consider necessary, so products that implement the SIP protocol are covered.

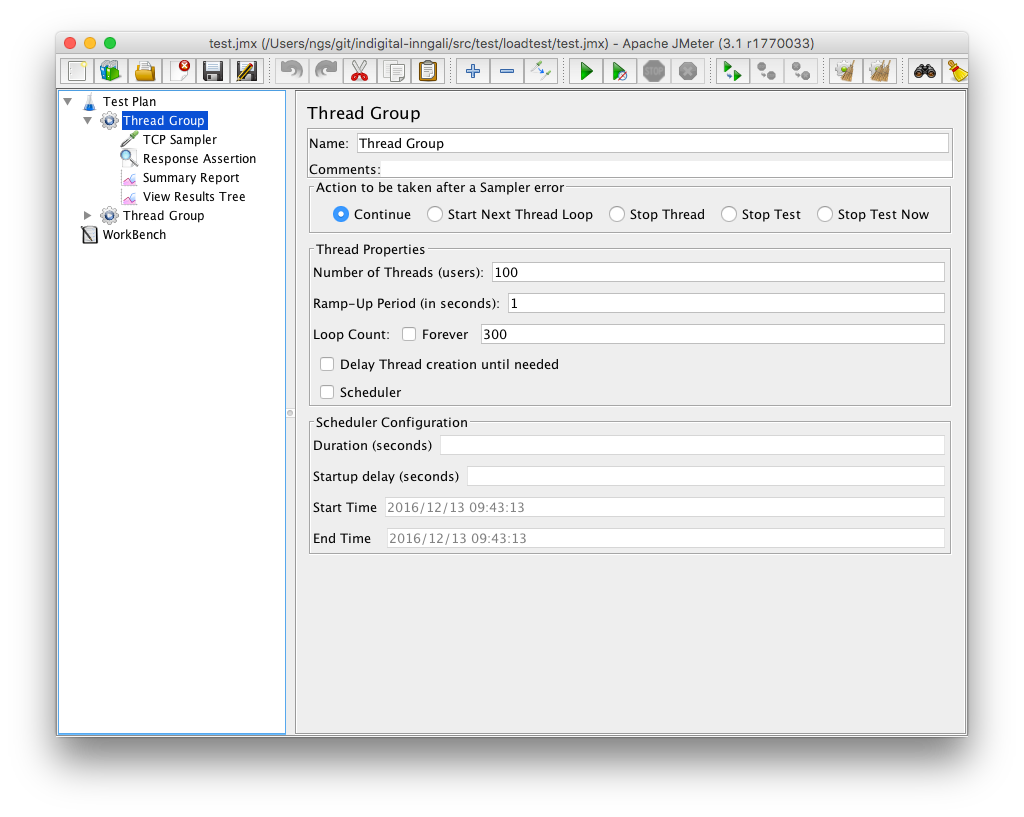

For other cases we have JMeter. This is a Swiss Army knife for load testing that lets you put load on almost any application, server or protocol and generate a custom report. It’s very easy to define the input used to generate test data, randomize it, configure the number of threads, extract data from the responses, make assertions on responses, run custom commands, etc.
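The core idea behind these tools (many concurrent workers, measured responses, a summary report) can be sketched in a few lines of Python. This is a toy illustration, not a replacement for SIPp or JMeter. `handle_request` is a hypothetical placeholder for a call to the product, and `run_load` and `summarize` are names invented for the example.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(request_id):
    """Hypothetical operation under load; a real test would call the product."""
    time.sleep(0.001)
    return request_id


def run_load(operation, total_requests, workers):
    """Run `operation` total_requests times on `workers` threads and
    return the per-request latencies in seconds."""

    def timed_call(request_id):
        start = time.perf_counter()
        operation(request_id)
        return time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(timed_call, range(total_requests)))


def summarize(latencies):
    """Build a small report from a non-empty list of latencies."""
    ordered = sorted(latencies)
    # Nearest-rank 95th percentile, computed with integer arithmetic.
    p95_rank = (95 * len(ordered) + 99) // 100
    return {
        "requests": len(ordered),
        "mean": sum(ordered) / len(ordered),
        "p95": ordered[max(0, p95_rank - 1)],
        "max": ordered[-1],
    }
```

A run could look like `summarize(run_load(handle_request, total_requests=200, workers=10))`. The resulting numbers can then be compared against whatever thresholds the non-functional requirements define.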

Extra ball: Testing documentation

At this point we know that testing the source code is great. So why stop here? The source code is not the only asset we are in charge of. Documentation is a highly valuable asset, and probably the first place that people who are new to the product will go. A bad first impression can cause a product to be rejected even if it’s technically impressive.

So we decided to create our own tool to validate the documentation we generate, checking at least that the formatting is correct and that it contains no unwanted elements. This is easy to do if you write your documentation in a markup language like reStructuredText or Markdown.
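We don’t describe our tool in detail here, but the general approach can be sketched as follows. The rules in `UNWANTED_PATTERNS` and the function `validate_markdown` are hypothetical, chosen only to show how plain-text markup makes this kind of check straightforward.

```python
import re

# Hypothetical rules, chosen only for illustration.
UNWANTED_PATTERNS = {
    "leftover TODO/FIXME marker": re.compile(r"\b(TODO|FIXME)\b"),
    "trailing whitespace": re.compile(r"[ \t]+$"),
    "tab character": re.compile(r"\t"),
}


def validate_markdown(text):
    """Return a list of (line_number, problem) tuples; empty means the text passed."""
    problems = []
    in_code_fence = False
    for number, line in enumerate(text.splitlines(), start=1):
        if line.startswith("```"):
            in_code_fence = not in_code_fence
            continue
        if in_code_fence:
            continue  # code samples are not checked
        for problem, pattern in UNWANTED_PATTERNS.items():
            if pattern.search(line):
                problems.append((number, problem))
    return problems
```

A check like this can run in CI next to the unit tests and fail the build when the returned list is not empty.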

Conclusion

Don’t let anyone tell you that testing can be left to a separate team or done after releasing. You must do it alongside your implementation (or even before). If you don’t, your software won’t be sustainable and you will soon find yourself in a technical-debt hole. Automated testing protects you against mistakes, and we believe it’s the only way to build consistent, quality software. It will definitely pay off.

Try to automate all your tests using a CI tool. Running manual tests is painful and unproductive. Only run manual tests when there is no other viable option (and don’t forget to run them!).

We hope you like the way we do testing at Zaleos. In any case, we will keep improving! :-)

References:

- Vincent Massol and Ted Husted. 2003. JUnit in Action. Manning Publications Co., Greenwich, CT, USA.

- xUnit Patterns.

- José San Román A. de Lara. 2017. Do you have a #bug? Your unit tests are not well planned. Codemotion Madrid 2017.

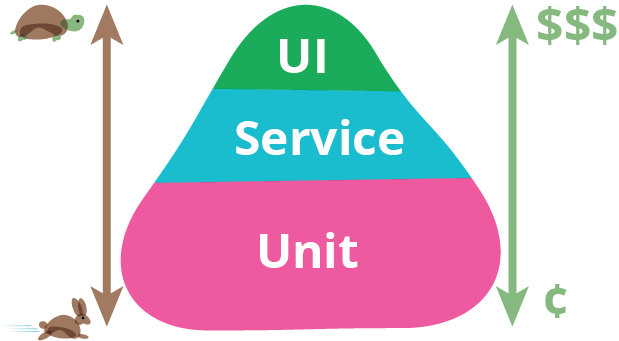

- Fowler, M. (2012, May 1). TestPyramid. Retrieved from https://martinfowler.com